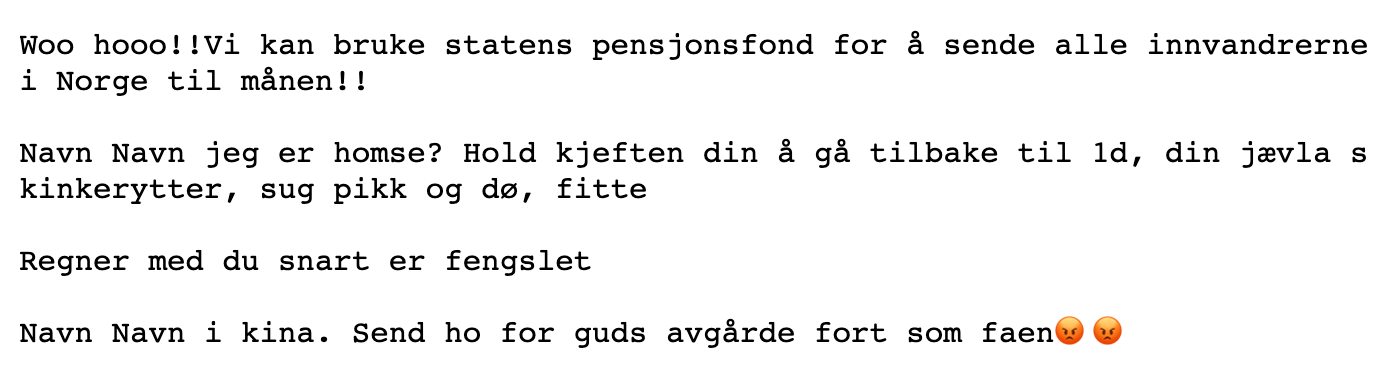

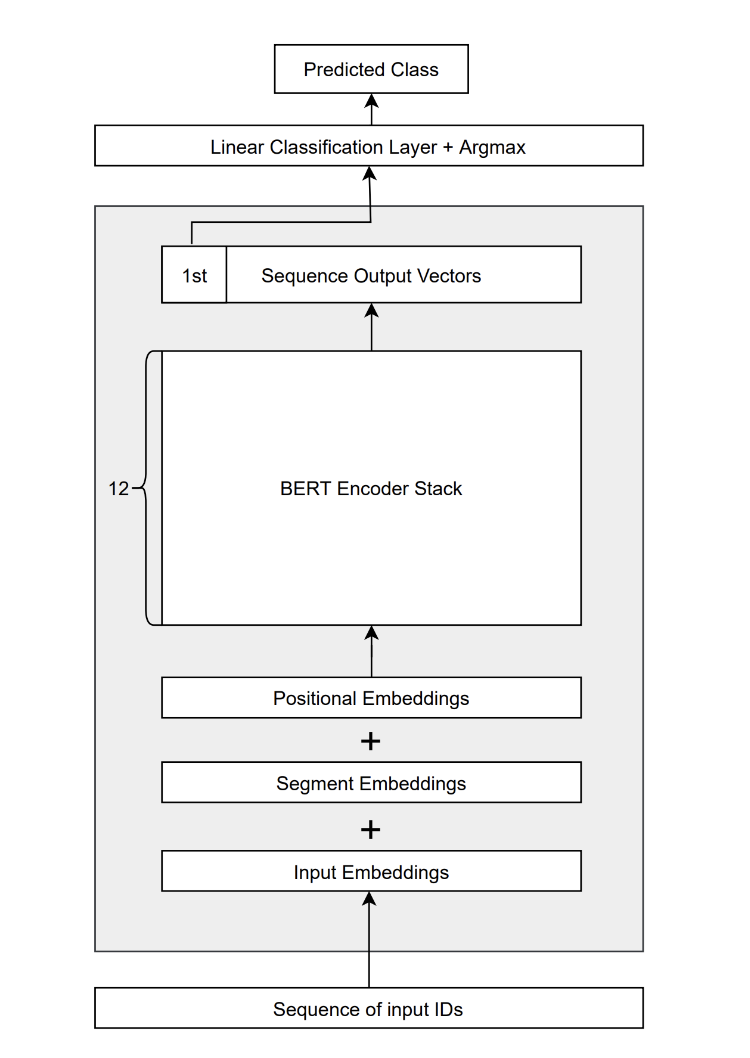

Computer science student Vilde Roland Arntzen uses the IDUN clusters in her master's thesis to detect offensive and hateful language in social media comments. The HPC clusters enable her to build complex transformer-based models that transfer general language understanding, learned from objective corpora such as Wikipedia, newspapers, and books, to the specific task of separating neutral, offensive, and hateful social media comments. The model she uses is BERT (Bidirectional Encoder Representations from Transformers), a state-of-the-art language model.

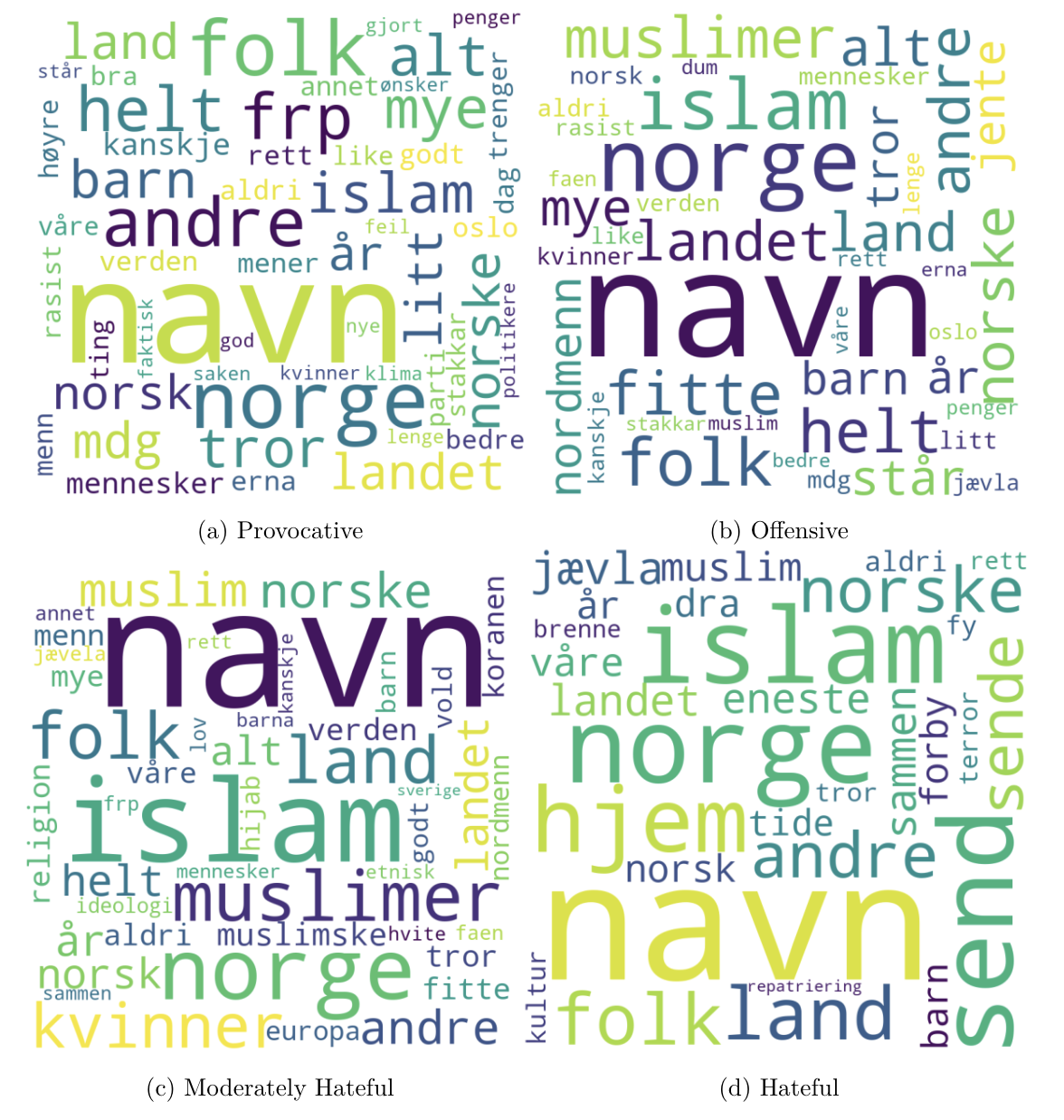

The advantage of more complex transformer-based models is that, in addition to learning word occurrences, they can learn more complex contexts within the text. This makes a massive difference for hate speech detection, which depends as much on context as on the types of words used.
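To illustrate why word occurrences alone fall short, here is a minimal sketch in Python. It is not taken from the thesis: the `bag_of_words` helper and both example comments are hypothetical, chosen only to show that a pure word-count representation cannot tell apart two comments with opposite meanings.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Count word occurrences, ignoring word order, case, and punctuation.

    Hypothetical helper for illustration; not the thesis's feature pipeline.
    """
    words = [w.strip(".,!?").lower() for w in text.split()]
    return Counter(w for w in words if w)


# Two made-up comments that use exactly the same words in a different order.
comment_a = "You are not stupid, you are smart."
comment_b = "You are not smart, you are stupid."

# A word-occurrence model sees identical inputs, although the meanings differ.
same_counts = bag_of_words(comment_a) == bag_of_words(comment_b)
print(same_counts)
```

Because both comments reduce to the same counts, any classifier built only on those counts must give them the same label. A context-aware model receives the words in order and can, in principle, separate the two.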

For example, curse words are more common in some cultures than in others, such as in the northern parts of Norway compared to the south. Although curse words often appear in some types of hateful comments, a comment is not hateful merely because it contains one. Likewise, a hateful comment does not necessarily contain any indicative hateful or curse words; the hate can be expressed implicitly (e.g., through metaphors or sarcasm). Therefore, it is necessary to analyze other aspects of the comments, for instance whether a comment contains a targeted insult and whether that insult targets a group or an individual.
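The aspects mentioned above could be recorded per comment roughly as follows. This is only a sketch under stated assumptions: the `CommentAnnotation` and `Target` names, the field names, and the example comments are all hypothetical and do not describe the annotation scheme actually used in the thesis.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Target(Enum):
    """Who a targeted insult is aimed at (hypothetical label set)."""
    INDIVIDUAL = "individual"
    GROUP = "group"


@dataclass(frozen=True)
class CommentAnnotation:
    """Hypothetical per-comment record; not the thesis's actual scheme."""
    text: str
    contains_curse_word: bool
    has_targeted_insult: bool
    target: Optional[Target] = None

    def __post_init__(self) -> None:
        # A target only makes sense when the comment has a targeted insult.
        if self.target is not None and not self.has_targeted_insult:
            raise ValueError("target requires has_targeted_insult=True")


# A curse word without any insult: the word alone carries no target.
casual = CommentAnnotation(
    text="Damn, that was a cold morning.",
    contains_curse_word=True,
    has_targeted_insult=False,
)

# Placeholder text standing in for an implicit insult with no curse words.
implicit = CommentAnnotation(
    text="<comment with an implicit insult aimed at a group>",
    contains_curse_word=False,
    has_targeted_insult=True,
    target=Target.GROUP,
)
```

Keeping the curse-word flag separate from the insult and target fields mirrors the point of the paragraph: the presence of a curse word and the presence of a targeted insult are independent properties of a comment.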