IDUN provides access to a coding assistant and LLMs that run locally on the IDUN HPC cluster.

These LLMs are available (updated 2025-08-14):

| Model name | Purpose |
| --- | --- |
| openai/gpt-oss-120b | General purpose (recommended) |
| Qwen/Qwen3-Coder-30B-A3B-Instruct | AI coding assistant (recommended) |
| google/gemma-3-27b-it | General purpose |
| deepseek-ai/DeepSeek-R1-Distill-Qwen-32B | General purpose |

Usage statistics: https://ai.hpc.ntnu.no/stats

Introduction video

How to access

The IDUN LLMs are available from the NTNU network or through the NTNU VPN.

The models can be used via editor plugins, the web interface, or the API.

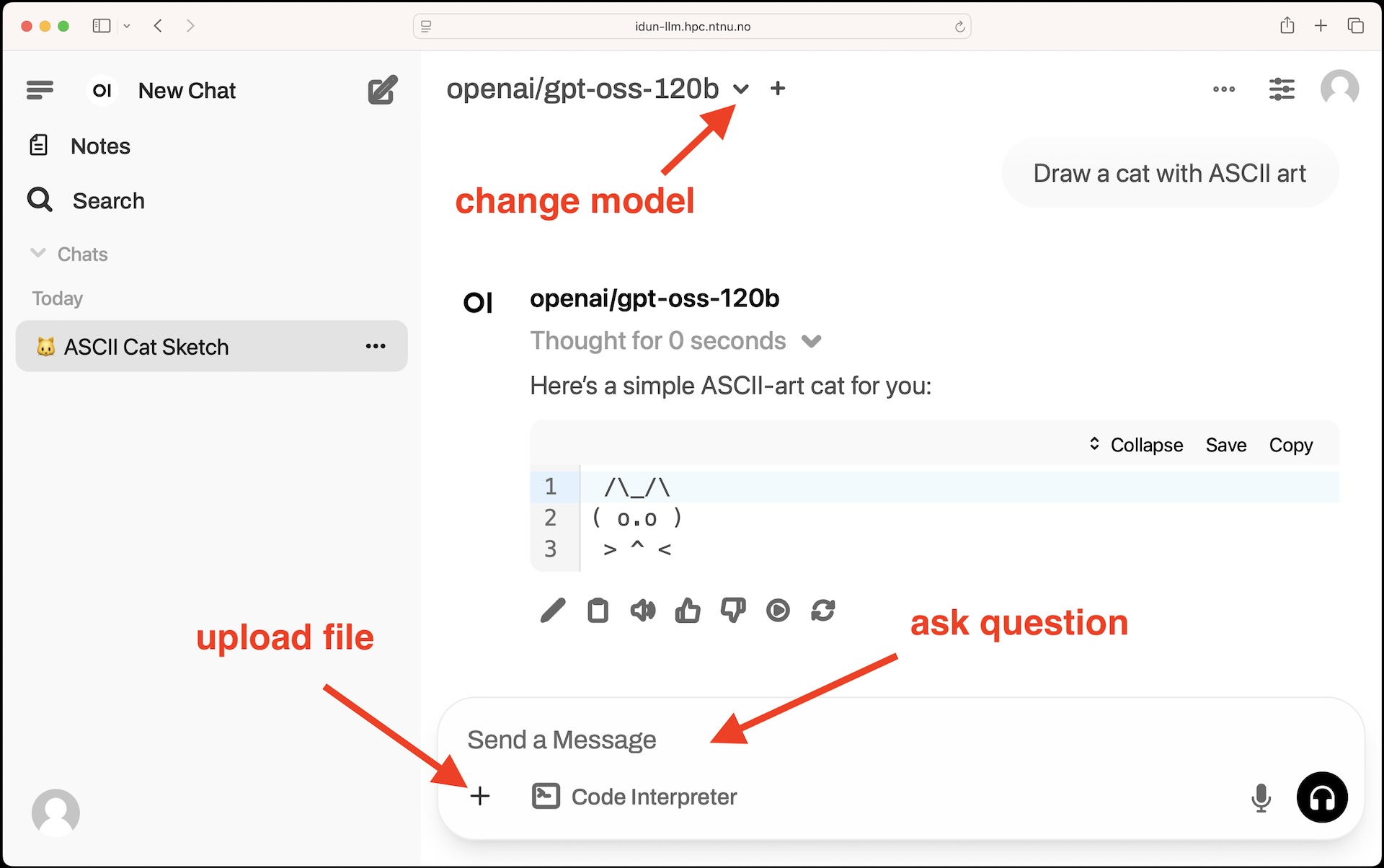

Web interface (Open WebUI)

Link: https://idun-llm.hpc.ntnu.no

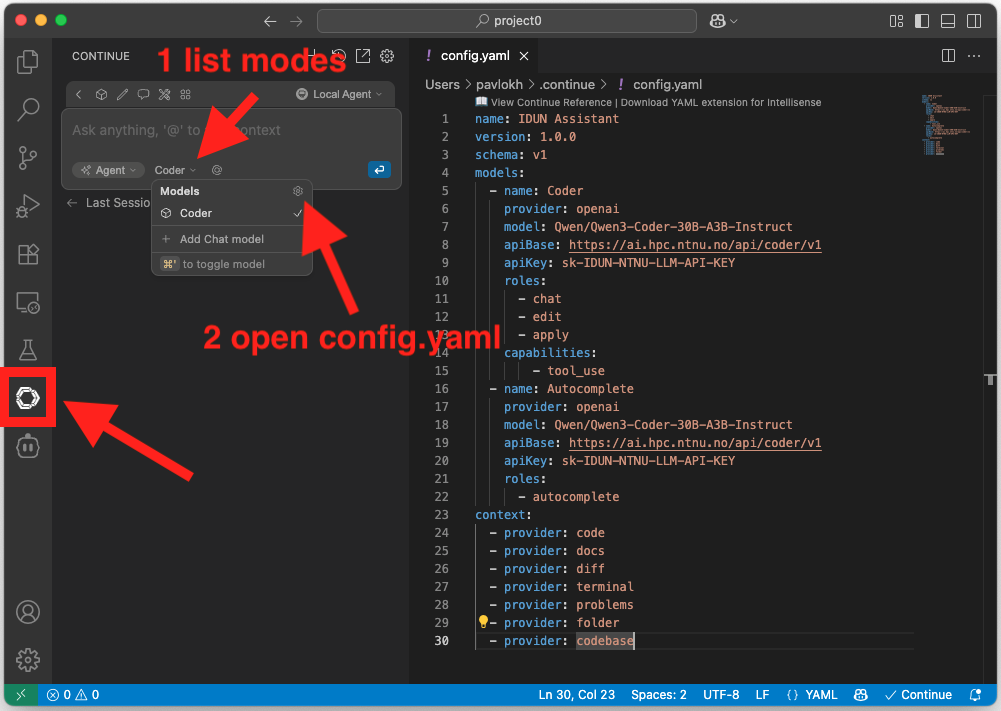

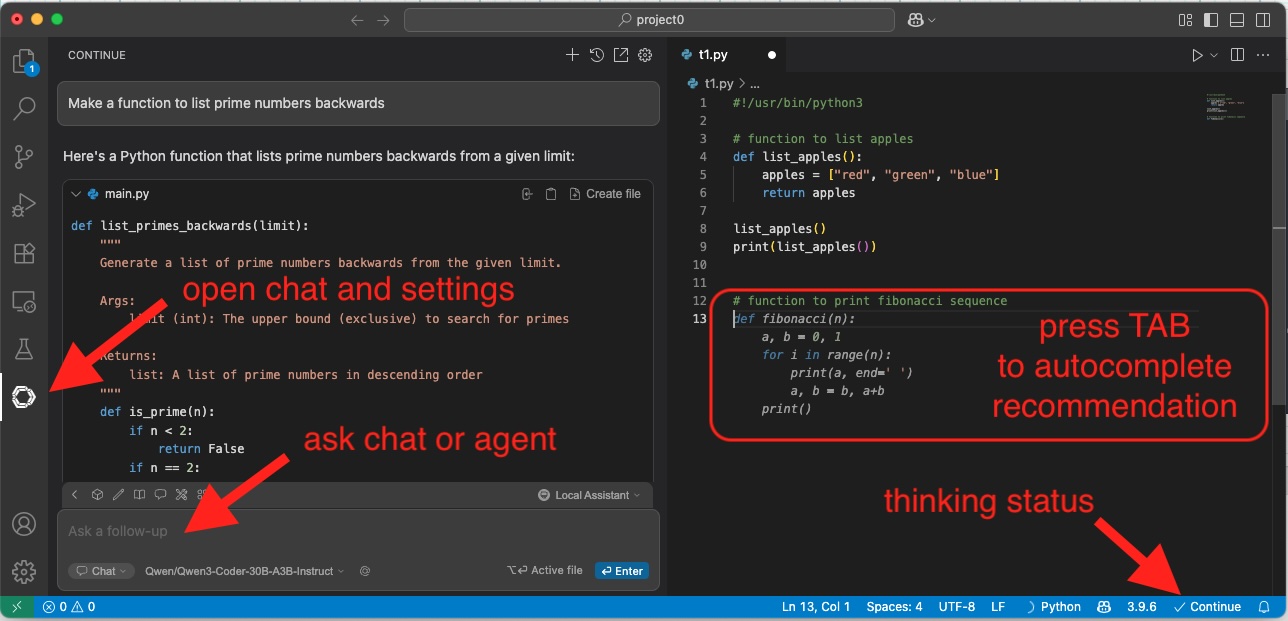

Visual Studio Code and Code Server (Continue.dev plugin)

Start VS Code locally, or start Code Server (the web version of VS Code) on https://apps.hpc.ntnu.no

Install the Continue extension from the VS Code Marketplace.

Add a configuration file by clicking the gear icon in the chat model list (see screenshot):

Another way to add the configuration: create a directory named ".continue" (with a leading dot) in your user directory, then create the configuration file "config.yaml" in that directory.

File content:

    name: IDUN Assistant
    version: 1.0.0
    schema: v1
    models:
      - name: Coder
        provider: openai
        model: Qwen/Qwen3-Coder-30B-A3B-Instruct
        apiBase: https://ai.hpc.ntnu.no/api/coder/v1
        apiKey: sk-IDUN-NTNU-LLM-API-KEY
        roles:
          - chat
          - edit
          - apply
        capabilities:
          - tool_use
      - name: Autocomplete
        provider: openai
        model: Qwen/Qwen3-Coder-30B-A3B-Instruct
        apiBase: https://ai.hpc.ntnu.no/api/coder/v1
        apiKey: sk-IDUN-NTNU-LLM-API-KEY
        roles:
          - autocomplete
    context:
      - provider: code
      - provider: docs
      - provider: diff
      - provider: terminal
      - provider: problems
      - provider: folder
      - provider: codebase

Main elements (screenshot):

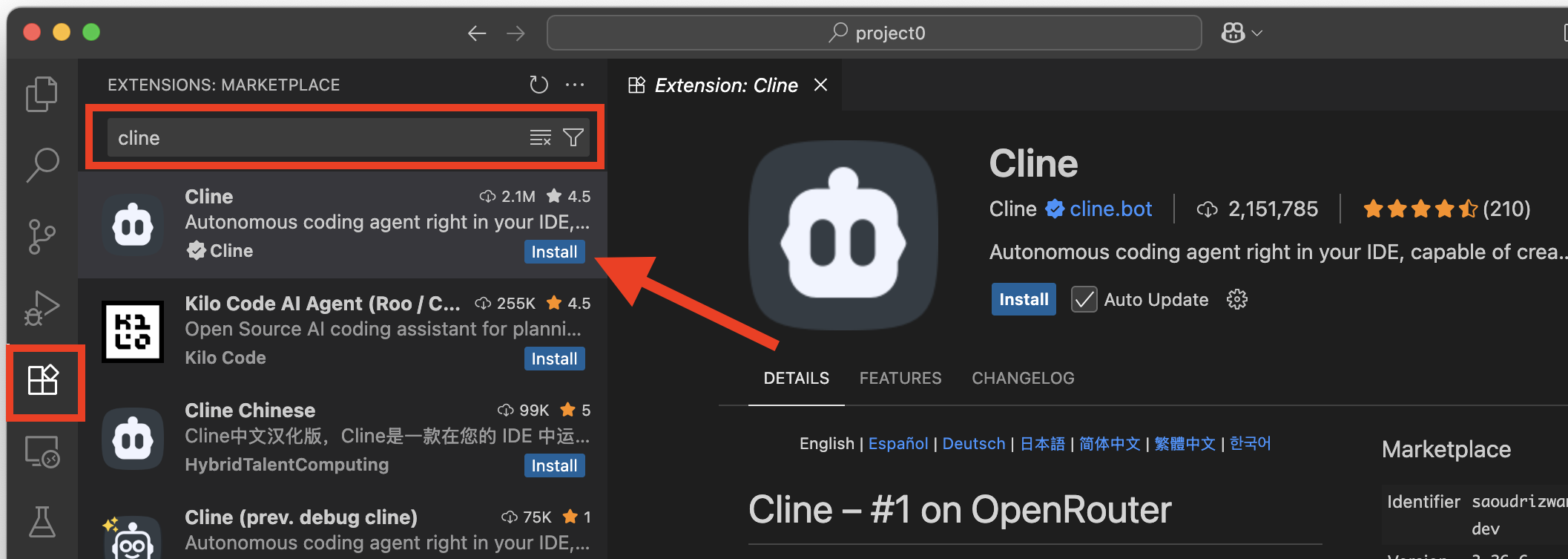
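The manual alternative described above (a ".continue" directory in your user directory containing "config.yaml") can also be scripted. The following is a minimal sketch, not an official installer: the helper name `write_continue_config` is hypothetical, and the embedded configuration is an abbreviated copy of the file content from this page with only the chat model.

```python
from pathlib import Path

# Abbreviated copy of the configuration from this page (chat model only).
CONFIG_TEXT = """\
name: IDUN Assistant
version: 1.0.0
schema: v1
models:
  - name: Coder
    provider: openai
    model: Qwen/Qwen3-Coder-30B-A3B-Instruct
    apiBase: https://ai.hpc.ntnu.no/api/coder/v1
    apiKey: sk-IDUN-NTNU-LLM-API-KEY
    roles:
      - chat
      - edit
      - apply
"""


def write_continue_config(home: Path, text: str = CONFIG_TEXT) -> Path:
    """Create <home>/.continue/config.yaml unless it already exists.

    Hypothetical helper; returns the path of the configuration file.
    """
    config_dir = home / ".continue"
    config_dir.mkdir(parents=True, exist_ok=True)
    config_path = config_dir / "config.yaml"
    if not config_path.exists():
        # Never overwrite a configuration the user already has.
        config_path.write_text(text, encoding="utf-8")
    return config_path
```

Calling `write_continue_config(Path.home())` would place the file in your own user directory. The helper deliberately leaves an existing file untouched, so it is safe to run more than once.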

Visual Studio Code and Code Server (Cline plugin)

Start VS Code locally, or start Code Server (the web version of VS Code) on https://apps.hpc.ntnu.no

Install the Cline extension from the VS Code Marketplace.

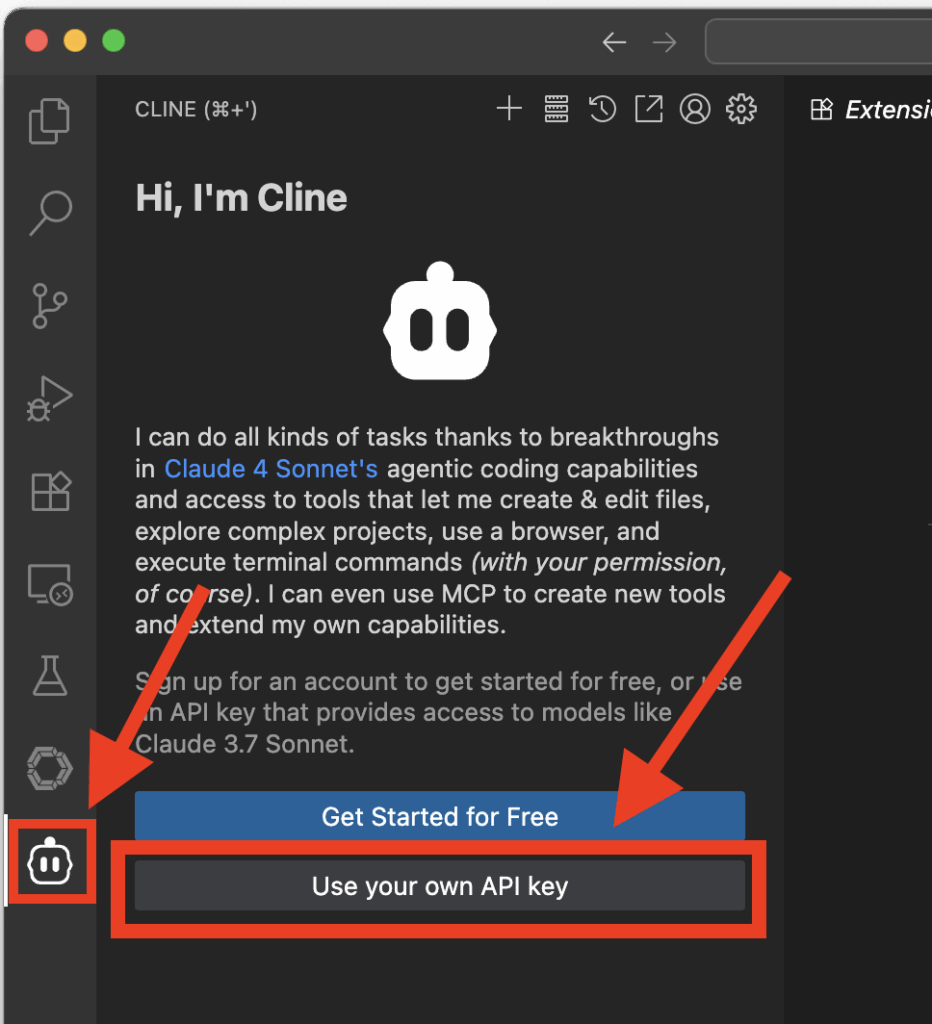

Add the configuration by clicking "Use your own API key" (see screenshot):

Use these settings:

API Provider: OpenAI Compatible

Base URL: https://ai.hpc.ntnu.no/api/coder/v1

OpenAI Compatible API Key: sk-IDUN-NTNU-LLM-API-KEY

Model ID: Qwen/Qwen3-Coder-30B-A3B-Instruct
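Before entering these values in a plugin, it can help to confirm that they work together. The sketch below builds a chat completion request from the same three values using only the Python standard library. It assumes the endpoint follows the usual OpenAI-compatible layout, with `/chat/completions` appended to the Base URL; the function names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Values copied from the settings above.
BASE_URL = "https://ai.hpc.ntnu.no/api/coder/v1"
API_KEY = "sk-IDUN-NTNU-LLM-API-KEY"
MODEL_ID = "Qwen/Qwen3-Coder-30B-A3B-Instruct"


def build_chat_request(prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 50,  # keep the connectivity check short
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",  # assumed OpenAI-style path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON body (needs NTNU network or VPN)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

From the NTNU network or VPN, `send(build_chat_request("Say hello"))["choices"][0]["message"]["content"]` should return a short reply if the settings are correct.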

Visual Studio Code and Code Server (Roo Code plugin)

Start VS Code locally, or start Code Server (the web version of VS Code) on https://apps.hpc.ntnu.no

Install the Roo Code extension from the VS Code Marketplace.

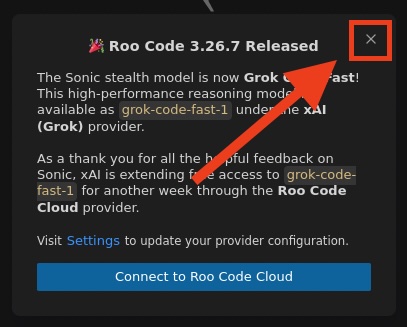

Change the settings as follows (see screenshot):

API Provider: OpenAI Compatible

Base URL: https://ai.hpc.ntnu.no/api/coder/v1

OpenAI Compatible API Key: sk-IDUN-NTNU-LLM-API-KEY

Model ID: Qwen/Qwen3-Coder-30B-A3B-Instruct

There is no need to connect to Roo Code Cloud; close the request when it appears.

JupyterLab (Jupyter AI plugin)

Install the Jupyter AI plugin. Example with a new Python environment:

    module load Python/3.12.3-GCCcore-13.3.0
    python -m venv /cluster/home/USERNAME/JupiterAI
    source /cluster/home/USERNAME/JupiterAI/bin/activate
    pip install jupyterlab
    pip install 'jupyter-ai[all]'

Start JupyterLab via https://apps.hpc.ntnu.no

Change Jupyter AI settings:

Language Model

- Completion model: OpenAI (general interface)::*

- Model ID: Qwen/Qwen3-Coder-30B-A3B-Instruct

- Base API URL: https://ai.hpc.ntnu.no/api/coder/v1

Inline completions model (Optional)

- Completion model: OpenAI (general interface)::*

- Model ID: Qwen/Qwen3-Coder-30B-A3B-Instruct

- Base API URL: https://ai.hpc.ntnu.no/api/coder/v1

API Keys: sk-IDUN-NTNU-LLM-API-KEY

API

Two API options are available:

- Option 1 - Open WebUI API (create a personal API key; multiple models are available, but function calling is not supported yet because the models are served by vLLM)

- Option 2 - Direct vLLM API (function calling is supported; one public API key for all NTNU users; only 2 models are available now)
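The trade-off between the two options can be summarized as a small lookup. This is only an illustrative sketch: the `Endpoint` class and `pick_endpoint` function are hypothetical names, and the URLs are the ones used elsewhere on this page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    base_url: str
    key_kind: str        # "personal" (Open WebUI) or "public" (shared NTNU key)
    tool_calling: bool   # whether function/tool calling is supported


# Option 1: Open WebUI API, all models, personal key, no tool calling.
OPEN_WEBUI = Endpoint("https://idun-llm.hpc.ntnu.no/api", "personal", False)

# Option 2: direct vLLM API, two models, public key, tool calling.
VLLM_ENDPOINTS = {
    "Qwen/Qwen3-Coder-30B-A3B-Instruct": Endpoint(
        "https://ai.hpc.ntnu.no/api/coder/v1", "public", True
    ),
    "openai/gpt-oss-120b": Endpoint(
        "https://ai.hpc.ntnu.no/api/gpt/v1", "public", True
    ),
}


def pick_endpoint(model: str, need_tools: bool = False) -> Endpoint:
    """Choose an endpoint for a model, preferring Open WebUI unless tools are needed."""
    if need_tools:
        if model not in VLLM_ENDPOINTS:
            raise ValueError(f"Tool calling is not available for {model}")
        return VLLM_ENDPOINTS[model]
    return OPEN_WEBUI
```

For example, `pick_endpoint("openai/gpt-oss-120b", need_tools=True)` selects the direct vLLM endpoint, while a model such as `google/gemma-3-27b-it` is only reachable through Open WebUI.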

Option 1: Open WebUI provides API access to LLM models

Link: https://idun-llm.hpc.ntnu.no

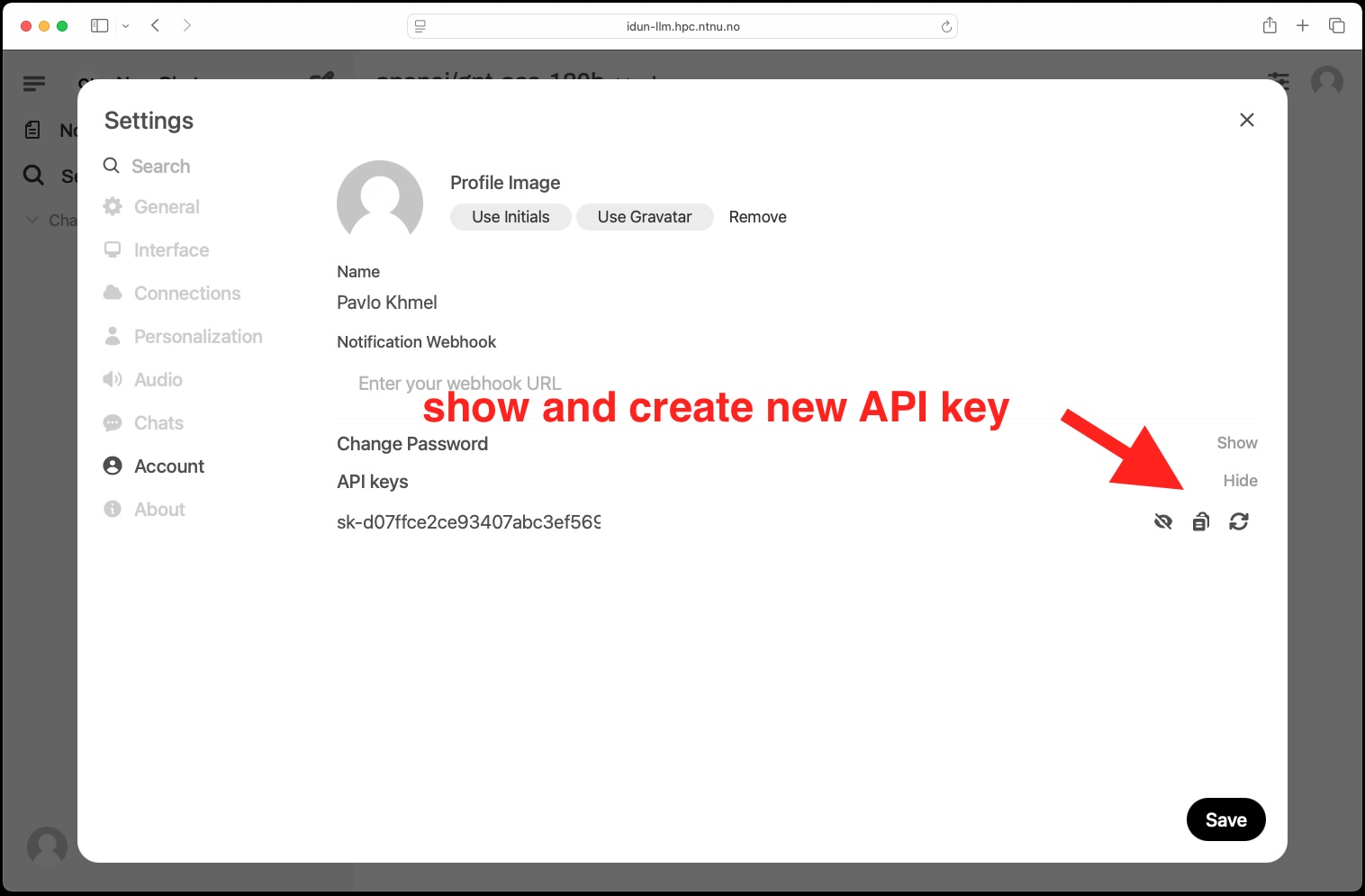

Create your Open WebUI API key: in Open WebUI, click [User Name] > Settings > Account > API keys.
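A personal key is sent to the API as a bearer token. To avoid typing it on the command line, where it can end up in shell history, the key can instead be read from an environment variable. The sketch below assumes a variable named `IDUN_LLM_API_KEY`; that name and both helper functions are hypothetical and not defined by IDUN.

```python
import os


def load_api_key(variable: str = "IDUN_LLM_API_KEY") -> str:
    """Read the personal API key from an environment variable (hypothetical name)."""
    key = os.environ.get(variable, "").strip()
    if not key:
        raise RuntimeError(f"Set {variable} to your Open WebUI API key first")
    return key


def auth_headers(key: str) -> dict:
    """HTTP headers for JSON requests authenticated with a bearer token."""
    return {
        "Authorization": f"Bearer {key}",
        "Content-Type": "application/json",
    }
```

After `export IDUN_LLM_API_KEY='MY-API-KEY'` in the shell, `auth_headers(load_api_key())` gives headers that can be passed to an HTTP client.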

Example: create a Python script chat.py that uses the API:

    #!/usr/bin/python3
    # Send a single question to a model through the Open WebUI API.
    import sys

    import requests


    def chat_with_model(token, model, question):
        # Open WebUI chat completions endpoint (OpenAI-style request body).
        url = 'https://idun-llm.hpc.ntnu.no/api/chat/completions'
        headers = {
            'Authorization': f'Bearer {token}',
            'Content-Type': 'application/json'
        }
        data = {
            "model": model,
            "messages": [
                {
                    "role": "user",
                    "content": question
                }
            ]
        }
        response = requests.post(url, headers=headers, json=data)
        return response.json()


    # Command-line arguments: API key, model name, question.
    my_api_key = sys.argv[1]
    my_model = sys.argv[2]
    my_question = sys.argv[3]

    answer = chat_with_model(my_api_key, my_model, my_question)
    print(answer)

Use:

    python3 chat.py 'MY-API-KEY' 'openai/gpt-oss-120b' 'Why is the sky blue?'

Example with additional parameters (temperature, top_p, top_k, max_tokens, frequency_penalty, presence_penalty, stop sequences):

    #!/usr/bin/python3
    # Same script as before, with sampling parameters and stop sequences added.
    import sys

    import requests


    def chat_with_model(token, model, question):
        url = 'https://idun-llm.hpc.ntnu.no/api/chat/completions'
        headers = {
            'Authorization': f'Bearer {token}',
            'Content-Type': 'application/json'
        }
        data = {
            "model": model,
            "messages": [
                {
                    "role": "user",
                    "content": question
                }
            ],
            # Sampling parameters
            "temperature": 0.6,
            "top_p": 0.95,
            "top_k": 20,
            # Upper limit on the number of generated tokens
            "max_tokens": 800,
            # Repetition penalties
            "frequency_penalty": 0.9,
            "presence_penalty": 1.9,
            # Generation stops as soon as one of these strings is produced
            "stop": ["Galdhøpiggen", "Storsteinen"]
        }
        response = requests.post(url, headers=headers, json=data)
        return response.json()


    # Command-line arguments: API key, model name, question.
    my_api_key = sys.argv[1]
    my_model = sys.argv[2]
    my_question = sys.argv[3]

    answer = chat_with_model(my_api_key, my_model, my_question)
    print(answer)

Example output:

    % python3 chat.py 'MY-API-KEY' 'openai/gpt-oss-120b' 'Make list. Mountain per line of the tallest mountains in Norway?'
    /Users/pavlokh/Library/Python/3.9/lib/python/site-packages/urllib3/__init__.py:35: NotOpenSSLWarning: urllib3 v2 only supports OpenSSL 1.1.1+, currently the 'ssl' module is compiled with 'LibreSSL 2.8.3'. See: https://github.com/urllib3/urllib3/issues/3020
      warnings.warn(
    {'id': 'chatcmpl-85894db9e7174d4f9dc468d47c95b42f', 'object': 'chat.completion', 'created': 1756735493, 'model': 'openai/gpt-oss-120b', 'choices': [{'index': 0, 'message': {'role': 'assistant', 'content': None, 'refusal': None, 'annotations': None, 'audio': None, 'function_call': None, 'tool_calls': [], 'reasoning_content': 'User wants a list, each line containing a mountain name (presumably the tallest mountains in Norway). They ask: "Make list. Mountain per line of the tallest mountains in Norway?" So we need to provide a list with one mountain per line, presumably ordered by height descending. Should include maybe top 10 or more? Provide names and perhaps heights? The request is simple; no disallowed content.\n\nWe can respond with bullet points or just plain lines. Let\'s give top 10 highest peaks in Norway (including those on islands?). The highest is Galdhøpiggen'}, 'logprobs': None, 'finish_reason': 'stop', 'stop_reason': 'Galdhøpiggen'}], 'service_tier': None, 'system_fingerprint': None, 'usage': {'prompt_tokens': 84, 'total_tokens': 203, 'completion_tokens': 119, 'prompt_tokens_details': None}, 'prompt_logprobs': None, 'kv_transfer_params': None}

In this run the stop sequence "Galdhøpiggen" was reached while the model was still reasoning, so content is None and stop_reason reports the matched sequence.

List models:

    curl -H "Authorization: Bearer sk-55..YOUR_API_KEY..88" https://idun-llm.hpc.ntnu.no/api/models

Option 2: API for two models with tool calling support and a public API key. The API is served by vLLM:

- Qwen3-Coder-30B-A3B-Instruct

- openai/gpt-oss-120b

For model: Qwen3-Coder-30B-A3B-Instruct

API Provider: OpenAI Compatible

Base URL: https://ai.hpc.ntnu.no/api/coder/v1

OpenAI Compatible API Key: sk-IDUN-NTNU-LLM-API-KEY

Model ID: Qwen/Qwen3-Coder-30B-A3B-Instruct

For model: openai/gpt-oss-120b

API Provider: OpenAI Compatible

Base URL: https://ai.hpc.ntnu.no/api/gpt/v1

OpenAI Compatible API Key: sk-IDUN-NTNU-LLM-API-KEY

Model ID: openai/gpt-oss-120b

This example uses the Python module openai. First create a Python virtual environment and install the openai module:

    module load Python/3.12.3-GCCcore-13.3.0
    python -m venv venv-openai
    source venv-openai/bin/activate
    pip install openai

Create the file chat-tools.py with this tool calling example:

    import datetime
    import json

    import openai

    # Direct vLLM endpoint for openai/gpt-oss-120b, using the public NTNU key.
    client = openai.OpenAI(
        base_url="https://ai.hpc.ntnu.no/api/gpt/v1",
        api_key="sk-IDUN-NTNU-LLM-API-KEY"
    )


    def get_current_time():
        current_datetime = datetime.datetime.now()
        return f"Current Date and Time: {current_datetime}"


    # Tool description sent to the model; the function takes no arguments.
    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_current_time",
                "description": "Get current date and time"
            },
        }
    ]

    response = client.chat.completions.create(
        model="openai/gpt-oss-120b",
        messages=[{"role": "user", "content": "What's the time right now?"}],
        tools=tools
    )

    # Process the response
    response_message = response.choices[0].message

    if response_message.tool_calls:
        # The model asked for one or more tools to be run locally.
        for tool_call in response_message.tool_calls:
            function_name = tool_call.function.name
            function_args = json.loads(tool_call.function.arguments)

            if function_name == "get_current_time":
                time_info = get_current_time()
                print(f"Tool call executed: {function_name}() -> {time_info}")
            else:
                print(f"Unknown tool call: {function_name}")
    else:
        print(f"Model response (no tool call): {response_message.content}")

Example output:

    $ python3 chat-tools.py
    Tool call executed: get_current_time() -> Current Date and Time: 2025-09-26 17:18:32.408908
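The example stops once the tool has been executed locally. To let the model turn the result into a final answer, the tool output is normally sent back in a second request. The sketch below only builds that follow-up message list, following the OpenAI chat format (an assistant turn carrying `tool_calls`, then one `tool` message per call). The helper `tool_result_messages` and its plain-dict inputs are hypothetical; with the openai client the same fields are available as `tool_call.id`, `tool_call.function.name` and `tool_call.function.arguments`.

```python
def tool_result_messages(user_messages, tool_calls, results):
    """Build the message list for the follow-up request after running tools.

    user_messages: the messages sent in the first request
    tool_calls:    list of dicts with "id", "name" and "arguments" (JSON string)
    results:       dict mapping each tool call id to the tool's output
    """
    # Repeat the assistant turn that requested the tools.
    assistant_turn = {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": call["id"],
                "type": "function",
                "function": {
                    "name": call["name"],
                    "arguments": call["arguments"],
                },
            }
            for call in tool_calls
        ],
    }
    # One "tool" message per executed call, linked by tool_call_id.
    tool_turns = [
        {
            "role": "tool",
            "tool_call_id": call["id"],
            "content": str(results[call["id"]]),
        }
        for call in tool_calls
    ]
    return list(user_messages) + [assistant_turn] + tool_turns
```

Passing the returned list as `messages` to another `client.chat.completions.create(...)` call, with the same `model` and `tools`, would give the model what it needs to phrase the final answer.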